18. Computing Square Roots#

18.1. Introduction#

Chapter 24 of [Russell, 2004] about early Greek mathematics and astronomy contains this fascinating passage:

The square root of 2, which was the first irrational to be discovered, was known to the early Pythagoreans, and ingenious methods of approximating to its value were discovered. The best was as follows: Form two columns of numbers, which we will call the

’s and the ’s; each starts with a . The next , at each stage, is formed by adding the last and the already obtained; the next is formed by adding twice the previous to the previous . The first 6 pairs so obtained are . In each pair, is or . Thus is nearly the square root of two, and at each fresh step it gets nearer. For instance, the reader may satisy himself that the square of is very nearly equal to .

This lecture drills down and studies this ancient method for computing square roots by using some of the matrix algebra that we’ve learned in earlier quantecon lectures.

In particular, this lecture can be viewed as a sequel to Eigenvalues and Eigenvectors.

It provides an example of how eigenvectors isolate invariant subspaces that help construct and analyze solutions of linear difference equations.

When vector

Invariant subspace methods are used throughout applied economic dynamics, for example, in the lecture Money Financed Government Deficits and Price Levels.

Our approach here is to illustrate the method with an ancient example, one that ancient Greek mathematicians used to compute square roots of positive integers.

18.2. Perfect squares and irrational numbers#

An integer is called a perfect square if its square root is also an integer.

An ordered sequence of perfect squares starts with

If an integer is not a perfect square, then its square root is an irrational number – i.e., it cannot be expressed as a ratio of two integers, and its decimal expansion is indefinite.

The ancient Greeks invented an algorithm to compute square roots of integers, including integers that are not perfect squares.

Their method involved

computing a particular sequence of integers

computing

deducing the desired square root from

In this lecture, we’ll describe this method.

We’ll also use invariant subspaces to describe variations on this method that are faster.

18.3. Second-order linear difference equations#

Before telling how the ancient Greeks computed square roots, we’ll provide a quick introduction to second-order linear difference equations.

We’ll study the following second-order linear difference equation

where

Equation (18.1) is actually an infinite number of linear equations in the sequence

There is one equation each for

We could follow an approach taken in the lecture on present values and stack all of these equations into a single matrix equation that we would then solve by using matrix inversion.

Note

In the present instance, the matrix equation would multiply a countably infinite dimensional square matrix by a countably infinite dimensional vector. With some qualifications, matrix multiplication and inversion tools apply to such an equation.

But we won’t pursue that approach here.

Instead, we’ll seek to find a time-invariant function that solves our difference equation, meaning

that it provides a formula for a

We seek an expression for

We call such a function

One way to discover a solution is to use a guess and verify method.

We shall begin by considering a special initial pair of initial conditions that satisfy

where

For initial condition that satisfy (18.3) equation (18.1) impllies that

We want

which we can rewrite as the characteristic equation

Applying the quadratic formula to solve for the roots of (18.6) we find that

For either of the two

provided that we set

The general solution of difference equation (18.1) takes the form

where

or

Sometimes we are free to choose the initial conditions

If we choose

If we choose

Soon we’ll relate the preceding calculations to components an eigen decomposition of a transition matrix that represents difference equation (18.1) in a very convenient way.

We’ll turn to that after we describe how Ancient Greeks figured out how to compute square roots of positive integers that are not perfect squares.

18.4. Algorithm of the Ancient Greeks#

Let

So

We want an algorithm to compute the square root of

If

If

Ancient Greeks used a recursive algorithm to compute square roots of integers that are not perfect squares.

The algorithm iterates on a second-order linear difference equation in the sequence

together with a pair of integers that are initial conditions for

First, we’ll deploy some techniques for solving the difference equations that are also deployed in Samuelson Multiplier-Accelerator.

The characteristic equation associated with difference equation (18.12) is

(Notice how this is an instance of equation (18.6) above.)

Factoring the right side of equation (18.13), we obtain

where

for

These two special values of

By applying the quadratic formula to solve for the roots the characteristic equation (18.13), we find that

Formulas (18.15) indicate that

Ancient Greeks had an indirect way of exploiting this fact to compute square roots of a positive integer.

They did this by starting from particular initial conditions

Solutions of difference equation (18.12) take the form

where

System (18.16) of simultaneous linear equations will play a big role in the remainder of this lecture.

Since

Thus,

However, notice that if

so that

Actually, if

so that convergence is immediate and there is no need to take limits.

Symmetrically, if

so again, convergence is immediate, and we have no need to compute a limit.

System (18.16) of simultaneous linear equations can be used in various ways.

we can take

we can instead take

Notice how we used the second approach above when we set

In taking this second approach, we constructed an invariant subspace of

Here is what is going on.

For

But for some special initial conditions

These special initial conditions require that

We’ll study these special initial conditions soon.

But first let’s write some Python code to iterate on equation (18.12) starting from an arbitrary

18.5. Implementation#

We now implement the above algorithm to compute the square root of

In this lecture, we use the following import:

import numpy as np

import matplotlib.pyplot as plt

def solve_λs(coefs):

# Calculate the roots using numpy.roots

λs = np.roots(coefs)

# Sort the roots for consistency

return sorted(λs, reverse=True)

def solve_η(λ_1, λ_2, y_neg1, y_neg2):

# Solve the system of linear equation

A = np.array([

[1/λ_1, 1/λ_2],

[1/(λ_1**2), 1/(λ_2**2)]

])

b = np.array((y_neg1, y_neg2))

ηs = np.linalg.solve(A, b)

return ηs

def solve_sqrt(σ, coefs, y_neg1, y_neg2, t_max=100):

# Ensure σ is greater than 1

if σ <= 1:

raise ValueError("σ must be greater than 1")

# Characteristic roots

λ_1, λ_2 = solve_λs(coefs)

# Solve for η_1 and η_2

η_1, η_2 = solve_η(λ_1, λ_2, y_neg1, y_neg2)

# Compute the sequence up to t_max

t = np.arange(t_max + 1)

y = (λ_1 ** t) * η_1 + (λ_2 ** t) * η_2

# Compute the ratio y_{t+1} / y_t for large t

sqrt_σ_estimate = (y[-1] / y[-2]) - 1

return sqrt_σ_estimate

# Use σ = 2 as an example

σ = 2

# Encode characteristic equation

coefs = (1, -2, (1 - σ))

# Solve for the square root of σ

sqrt_σ = solve_sqrt(σ, coefs, y_neg1=2, y_neg2=1)

# Calculate the deviation

dev = abs(sqrt_σ-np.sqrt(σ))

print(f"sqrt({σ}) is approximately {sqrt_σ:.5f} (error: {dev:.5f})")

sqrt(2) is approximately 1.41421 (error: 0.00000)

Now we consider cases where

# Compute λ_1, λ_2

λ_1, λ_2 = solve_λs(coefs)

print(f'Roots for the characteristic equation are ({λ_1:.5f}, {λ_2:.5f}))')

Roots for the characteristic equation are (2.41421, -0.41421))

# Case 1: η_1, η_2 = (0, 1)

ηs = (0, 1)

# Compute y_{t} and y_{t-1} with t >= 0

y = lambda t, ηs: (λ_1 ** t) * ηs[0] + (λ_2 ** t) * ηs[1]

sqrt_σ = 1 - y(1, ηs) / y(0, ηs)

print(f"For η_1, η_2 = (0, 1), sqrt_σ = {sqrt_σ:.5f}")

For η_1, η_2 = (0, 1), sqrt_σ = 1.41421

# Case 2: η_1, η_2 = (1, 0)

ηs = (1, 0)

sqrt_σ = y(1, ηs) / y(0, ηs) - 1

print(f"For η_1, η_2 = (1, 0), sqrt_σ = {sqrt_σ:.5f}")

For η_1, η_2 = (1, 0), sqrt_σ = 1.41421

We find that convergence is immediate.

Next, we’ll represent the preceding analysis by first vectorizing our second-order difference equation (18.12) and then using eigendecompositions of an associated state transition matrix.

18.6. Vectorizing the difference equation#

Represent (18.12) with the first-order matrix difference equation

or

where

Construct an eigendecomposition of

where columns of

The eigenvalues can be ordered so that

Write equation (18.12) as

Now we implement the algorithm above.

First we write a function that iterates

def iterate_M(x_0, M, num_steps, dtype=np.float64):

# Eigendecomposition of M

Λ, V = np.linalg.eig(M)

V_inv = np.linalg.inv(V)

# Initialize the array to store results

xs = np.zeros((x_0.shape[0],

num_steps + 1))

# Perform the iterations

xs[:, 0] = x_0

for t in range(num_steps):

xs[:, t + 1] = M @ xs[:, t]

return xs, Λ, V, V_inv

# Define the state transition matrix M

M = np.array([

[2, -(1 - σ)],

[1, 0]])

# Initial condition vector x_0

x_0 = np.array([2, 2])

# Perform the iteration

xs, Λ, V, V_inv = iterate_M(x_0, M, num_steps=100)

print(f"eigenvalues:\n{Λ}")

print(f"eigenvectors:\n{V}")

print(f"inverse eigenvectors:\n{V_inv}")

eigenvalues:

[ 2.41421356 -0.41421356]

eigenvectors:

[[ 0.92387953 -0.38268343]

[ 0.38268343 0.92387953]]

inverse eigenvectors:

[[ 0.92387953 0.38268343]

[-0.38268343 0.92387953]]

Let’s compare the eigenvalues to the roots (18.15) of equation (18.13) that we computed above.

roots = solve_λs((1, -2, (1 - σ)))

print(f"roots: {np.round(roots, 8)}")

roots: [ 2.41421356 -0.41421356]

Hence we confirmed (18.17).

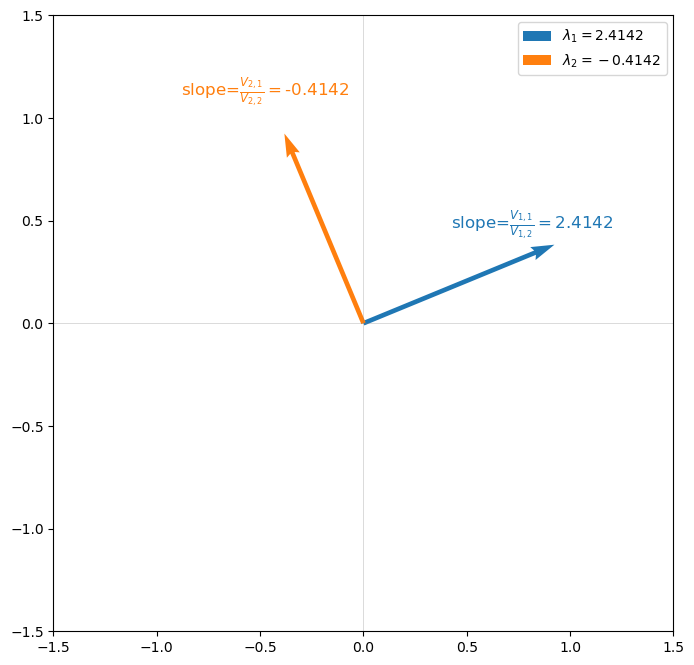

Information about the square root we are after is also contained in the two eigenvectors.

Indeed, each eigenvector is just a two-dimensional subspace of

that we encountered above in equation (18.8) above.

In equation (18.18), the

The following graph verifies this for our example.

Show source

# Plotting the eigenvectors

plt.figure(figsize=(8, 8))

plt.quiver(0, 0, V[0, 0], V[1, 0], angles='xy', scale_units='xy',

scale=1, color='C0', label=fr'$\lambda_1={np.round(Λ[0], 4)}$')

plt.quiver(0, 0, V[0, 1], V[1, 1], angles='xy', scale_units='xy',

scale=1, color='C1', label=fr'$\lambda_2={np.round(Λ[1], 4)}$')

# Annotating the slopes

plt.text(V[0, 0]-0.5, V[1, 0]*1.2,

r'slope=$\frac{V_{1,1}}{V_{1,2}}=$'+f'{np.round(V[0, 0] / V[1, 0], 4)}',

fontsize=12, color='C0')

plt.text(V[0, 1]-0.5, V[1, 1]*1.2,

r'slope=$\frac{V_{2,1}}{V_{2,2}}=$'+f'{np.round(V[0, 1] / V[1, 1], 4)}',

fontsize=12, color='C1')

# Adding labels

plt.axhline(0, color='grey', linewidth=0.5, alpha=0.4)

plt.axvline(0, color='grey', linewidth=0.5, alpha=0.4)

plt.legend()

plt.xlim(-1.5, 1.5)

plt.ylim(-1.5, 1.5)

plt.show()

18.7. Invariant subspace approach#

The preceding calculation indicates that we can use the eigenvectors

We’ll pursue that possibility now.

Define the transformed variables

Evidently, we can recover

The following notations and equations will help us.

Let

Notice that it follows from

that

and

These equations will be very useful soon.

Notice that

To deactivate

This can be achieved by setting

To deactivate

This can be achieved by setting

Let’s verify (18.19) and (18.20) below

To deactivate

xd_1 = np.array((x_0[0],

V[1,1]/V[0,1] * x_0[0]),

dtype=np.float64)

# Compute x_{1,0}^*

np.round(V_inv @ xd_1, 8)

array([-0. , -5.22625186])

We find

Now we deactivate

xd_2 = np.array((x_0[0],

V[1,0]/V[0,0] * x_0[0]),

dtype=np.float64)

# Compute x_{2,0}^*

np.round(V_inv @ xd_2, 8)

array([2.1647844, 0. ])

We find

# Simulate with muted λ1 λ2.

num_steps = 10

xs_λ1 = iterate_M(xd_1, M, num_steps)[0]

xs_λ2 = iterate_M(xd_2, M, num_steps)[0]

# Compute ratios y_t / y_{t-1}

ratios_λ1 = xs_λ1[1, 1:] / xs_λ1[1, :-1]

ratios_λ2 = xs_λ2[1, 1:] / xs_λ2[1, :-1]

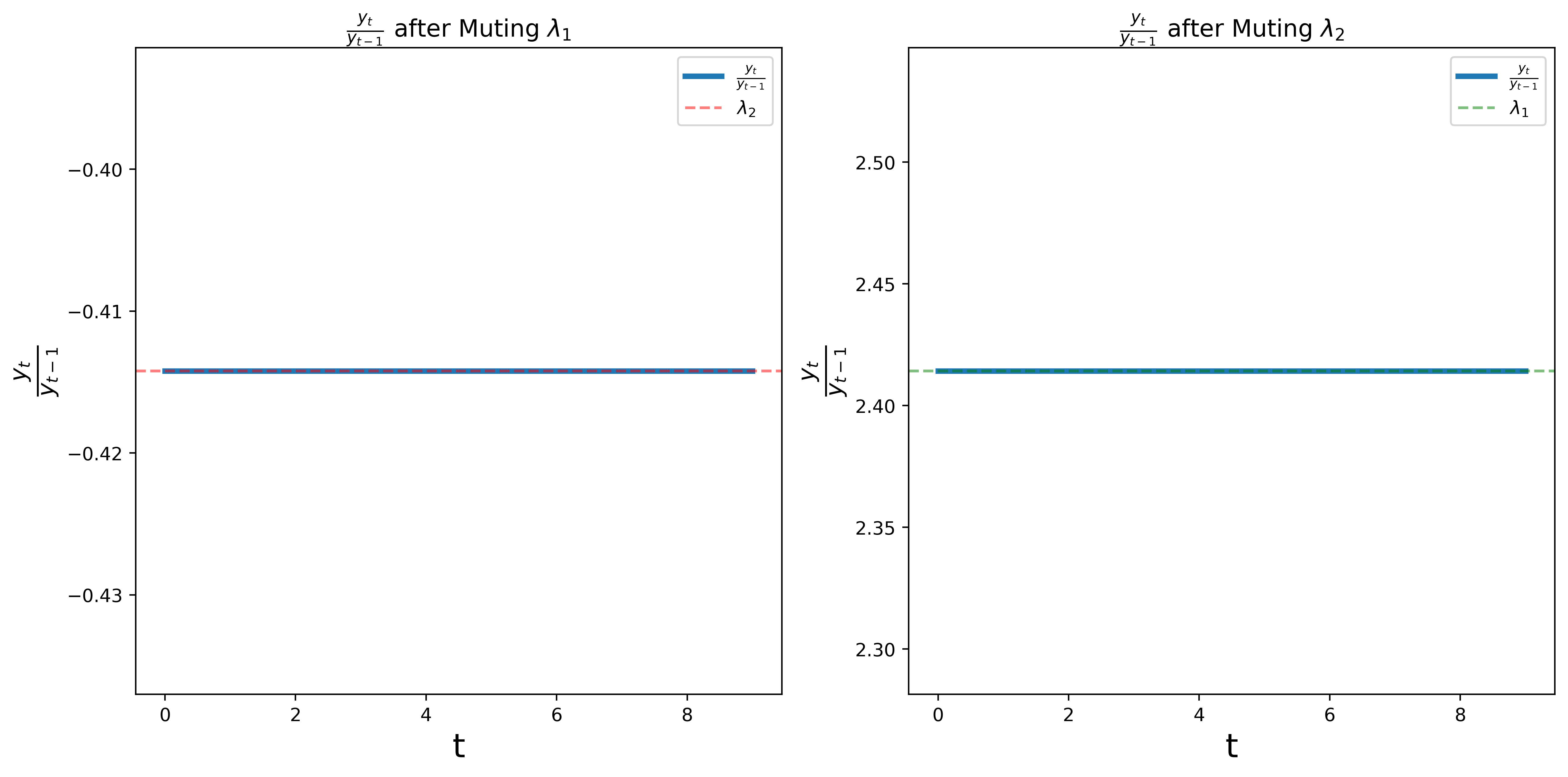

The following graph shows the ratios

We find that the ratios converge to

Show source

# Plot the ratios for y_t / y_{t-1}

fig, axs = plt.subplots(1, 2, figsize=(12, 6), dpi=500)

# First subplot

axs[0].plot(np.round(ratios_λ1, 6),

label=r'$\frac{y_t}{y_{t-1}}$', linewidth=3)

axs[0].axhline(y=Λ[1], color='red', linestyle='--',

label=r'$\lambda_2$', alpha=0.5)

axs[0].set_xlabel('t', size=18)

axs[0].set_ylabel(r'$\frac{y_t}{y_{t-1}}$', size=18)

axs[0].set_title(r'$\frac{y_t}{y_{t-1}}$ after Muting $\lambda_1$',

size=13)

axs[0].legend()

# Second subplot

axs[1].plot(ratios_λ2, label=r'$\frac{y_t}{y_{t-1}}$',

linewidth=3)

axs[1].axhline(y=Λ[0], color='green', linestyle='--',

label=r'$\lambda_1$', alpha=0.5)

axs[1].set_xlabel('t', size=18)

axs[1].set_ylabel(r'$\frac{y_t}{y_{t-1}}$', size=18)

axs[1].set_title(r'$\frac{y_t}{y_{t-1}}$ after Muting $\lambda_2$',

size=13)

axs[1].legend()

plt.tight_layout()

plt.show()

18.8. Concluding remarks#

This lecture sets the stage for many other applications of the invariant subspace methods.

All of these exploit very similar equations based on eigen decompositions.

We shall encounter equations very similar to (18.19) and (18.20) in Money Financed Government Deficits and Price Levels and in many other places in dynamic economic theory.

18.9. Exercise#

Exercise 18.1

Please use matrix algebra to formulate the method described by Bertrand Russell at the beginning of this lecture.

Define a state vector

Formulate a first-order vector difference equation for

Use the system

Compute the eigenvectors and eigenvalues of

Solution to Exercise 18.1

Here is one soluition.

According to the quote, we can formulate

with

By (18.21), we can write matrix

Then

# Define the matrix A

A = np.array([[1, 1],

[2, 1]])

# Initial vector x_0

x_0 = np.array([1, 1])

# Number of iterations

n = 6

# Generate the sequence

xs = np.array([x_0])

x_t = x_0

for _ in range(1, n):

x_t = A @ x_t

xs = np.vstack([xs, x_t])

# Print the sequence

for i, (a_t, b_t) in enumerate(xs):

print(f"Iter {i}: a_t = {a_t}, b_t = {b_t}")

# Compute eigenvalues and eigenvectors of A

eigenvalues, eigenvectors = np.linalg.eig(A)

print(f'\nEigenvalues:\n{eigenvalues}')

print(f'\nEigenvectors:\n{eigenvectors}')

Iter 0: a_t = 1, b_t = 1

Iter 1: a_t = 2, b_t = 3

Iter 2: a_t = 5, b_t = 7

Iter 3: a_t = 12, b_t = 17

Iter 4: a_t = 29, b_t = 41

Iter 5: a_t = 70, b_t = 99

Eigenvalues:

[ 2.41421356 -0.41421356]

Eigenvectors:

[[ 0.57735027 -0.57735027]

[ 0.81649658 0.81649658]]